Remix + Plausible Analytics: Resolving Proxy Issues

Adding analytics that works with ad blockers, and solving Content-Encoding and duplex errors when proxying behind Cloudflare.

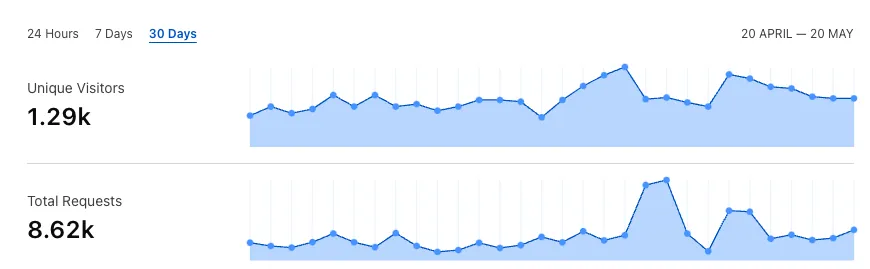

A few days ago I noticed that a side project of mine was getting some traffic, even though I shut down the backend server long ago and never really marketed it beyond a single Reddit post that did not get much attention.

The service translated Crystal Reports automatically. The setup was intricate because Crystal Reports is problematic legacy software that needs to run on Windows, which complicated deployment. It’s very finicky to get working correctly, requiring specific versions of dependencies and runtime environments. But this is a story for another time.

Intrigued by this unexpected traffic, I decided to revive the backend and add analytics to understand the source of these visitors and how they were using the application.

Plausible Analytics

Plausible Analytics is a lightweight, open-source alternative to Google Analytics that prioritizes user privacy. It doesn’t use cookies and is fully compliant with GDPR, CCPA, and PECR, making it increasingly popular among privacy-conscious developers. Unlike Google Analytics, Plausible collects only essential data without tracking users across sites or selling their data.

I have been using Plausible Analytics for some time now. There is also an open-source community version that you can self-host on your own server, which is what I am doing. Hooking your site up is as easy as adding:

```html
<script defer data-domain="yourdomain.com" src="https://plausible.io/js/script.js"></script>
```

When self-hosting you would replace https://plausible.io with your server domain. The biggest drawback is that many ad blockers will block this request. To solve this you would normally proxy the network call as described in this blog post. For Next there is an npm package, next-plausible, that creates proxying middleware, and for Remix you can just create routes. Namely,

```typescript
export const loader = async () => {
  const plausibleScriptData = await fetch('https://yourdomain.com/js/script.js');
  const script = await plausibleScriptData.text();
  const { status, headers } = plausibleScriptData;

  return new Response(script, {
    status,
    headers,
  });
};
```

This sends back the script with the original headers, and then in root.tsx you just reference it:

```html
<script defer data-domain="website.com" src="/js/script.js"></script>
```

Easy enough, right? Well, in my case it worked on localhost but not when deployed. A classical vibe coder would be happy at this point.

A short side note: Plausible is disabled on localhost because you normally wouldn’t want those clicks in your analytics. If you are testing, you can fetch a modified version of the script that works locally by requesting script.local.js instead of script.js.
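As a minimal sketch of that side note, the proxy route could pick the script file based on the host it is serving. Both function names and the `plausibleOrigin` parameter are hypothetical helpers for illustration, not part of Plausible or Remix.

```typescript
// Hypothetical helper: decides which Plausible script file to proxy.
// "script.local.js" is the variant that also tracks on localhost.
function plausibleScriptName(hostname: string): string {
  const isLocal =
    hostname === "localhost" || hostname === "127.0.0.1" || hostname === "[::1]";
  return isLocal ? "script.local.js" : "script.js";
}

// Builds the upstream URL. `plausibleOrigin` is a placeholder for your own
// Plausible server, written without a trailing slash.
function plausibleScriptUrl(plausibleOrigin: string, hostname: string): string {
  return plausibleOrigin + "/js/" + plausibleScriptName(hostname);
}
```

Inside a loader, the hostname could come from `new URL(request.url).hostname`, assuming the loader receives the incoming `request`.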

Err 1: Content Decoding Failed

I deployed the Remix app on Vercel, and the browser’s network log showed ERR_CONTENT_DECODING_FAILED. Weirdly, it worked perfectly fine locally. It took me way too long to figure this one out, but the full response in the terminal shows a content-encoding problem: the client expects gzip content because of the Content-Encoding header but fails to decode it.

```
http GET https://www.crystaltools.app/js/script.js

HTTP/1.1 200 OK
Access-Control-Allow-Origin: *
Age: 25727
CF-RAY: [CF-RAY-ID-REDACTED]
Cache-Control: public, max-age=86400, must-revalidate
Cf-Cache-Status: HIT
Connection: keep-alive
Content-Encoding: gzip
Content-Length: 776
Content-Type: application/javascript
Cross-Origin-Resource-Policy: cross-origin
Date: Mon, 19 May 2025 20:07:24 GMT
Last-Modified: Mon, 19 May 2025 12:58:37 GMT
Nel: {"report_to":"cf-nel","success_fraction":0.0,"max_age":604800}
Report-To: {"group":"cf-nel","max_age":604800,"endpoints":[{"url":"[CLOUDFLARE-REPORTING-URL-REDACTED]"}]}
Server: cloudflare
Strict-Transport-Security: max-age=63072000
Vary: Accept-Encoding
X-Content-Type-Options: nosniff
X-Vercel-Cache: MISS
X-Vercel-Id: [VERCEL-ID-REDACTED]
alt-svc: h3=":443"; ma=86400

http: error: ContentDecodingError: ('Received response with content-encoding: gzip, but failed to decode it.', error('Error -3 while decompressing data: incorrect header check'))
```

If we look at the content we see that it’s just plain text, and not gzipped.

```
curl -s https://www.crystaltools.app/js/script.js

!function(){"use strict";var a=window.location,o=window.document,t=o.currentScript,r=t.getAttribute("data-api")||new URL(t.src).origin+"/api/event",l=t.getAttribute("data-domain");function s(t,e){t&&console.warn("Ignoring Event: "+t),e&&e.callback&&e.callback()}function e(t,e){if(/^localhost$|^127(\.[0-9]+){0,2}\.[0-9]+$|^\[::1?\]$/.test(a.hostname)||"file:"===a.protocol)return s("localhost",e);if((window._phantom||window.__nightmare||window.navigator.webdriver||window.Cypress)&&!window.__plausible)return s(null,e);try{if("true"===window.localStorage.plausible_ignore)return s("localStorage flag",e)}catch(t){}var n={},i=(n.n=t,n.u=a.href,n.d=l,n.r=o.referrer||null,e&&e.meta&&(n.m=JSON.stringify(e.meta)),e&&e.props&&(n.p=e.props),new XMLHttpRequest);i.open("POST",r,!0%
```

This makes total sense if we look again at the code in js.script[.js].ts. We fetch the script from our Plausible server and decode it with .text() but keep the headers as is. Thus, if the upstream response was gzip-encoded, the Content-Encoding header we forward no longer matches the content of the Response we return.
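The mismatch can also be checked mechanically rather than by eye. The following is a minimal sketch, assuming you have the raw response bytes as they came off the wire (for example saved from `curl -s`, which does not decompress unless asked to). The function names are hypothetical; the only external fact used is that gzip data starts with the bytes 0x1f 0x8b.

```typescript
// gzip streams begin with the two magic bytes 0x1f 0x8b.
function looksGzipped(bytes: Uint8Array): boolean {
  return bytes.length >= 2 && bytes[0] === 0x1f && bytes[1] === 0x8b;
}

// Returns true when the declared Content-Encoding contradicts the actual bytes.
// `contentEncoding` is the raw header value, or null if the header is absent.
function hasEncodingMismatch(
  contentEncoding: string | null,
  body: Uint8Array
): boolean {
  const declaredGzip = (contentEncoding ?? "").toLowerCase().indexOf("gzip") !== -1;
  return declaredGzip !== looksGzipped(body);
}
```

For the response shown above, the header says gzip while the body starts with `!function`, so a check like this would flag it.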

Fair enough. We have multiple options to fix this. One is to pass the content through as bytes, without decoding it to a string, using .arrayBuffer():

```typescript
export const loader = async () => {
  const plausibleScriptData = await fetch('https://yourdomain.com/js/script.js');
  // arrayBuffer() hands the body on as binary data instead of a decoded string
  const script = await plausibleScriptData.arrayBuffer();
  const { status, headers } = plausibleScriptData;

  return new Response(script, {
    status,
    headers,
  });
};
```

One caveat: fetch implementations generally decompress the response body transparently, so .arrayBuffer() can still return decoded bytes while the forwarded Content-Encoding header says gzip. If that happens, the mismatch remains.

Alternatively, we can override the headers to match the actual content format (uncompressed text):

```typescript
export const loader = async () => {
  const plausibleScriptData = await fetch('https://yourdomain.com/js/script.js');
  const content = await plausibleScriptData.text();

  return new Response(content, {
    headers: {
      "Content-Type": "application/javascript",
      "Content-Encoding": "identity", // Explicitly states content is not encoded
    },
  });
};
```

Well, in my case this still did not solve the problem. At this point I had tried everything.

It turns out I had Cloudflare’s proxy feature enabled, and Cloudflare had cached the response. The first response, with mismatched content and headers, was cached, and Cloudflare kept returning that same cache hit.

```
http GET https://www.crystaltools.app/js/script.js

HTTP/1.1 200 OK
Access-Control-Allow-Origin: *
Age: 25727
CF-RAY: 9426386c4dbe9b37-FRA
Cache-Control: public, max-age=86400, must-revalidate
Cf-Cache-Status: HIT
Connection: keep-alive
Content-Encoding: gzip
Content-Length: 776
Content-Type: application/javascript
...
```

So you either disable proxying for the domain in your Cloudflare DNS settings, or you purge the cache.
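The two headers that give the cache away in the output above are Cf-Cache-Status and Age. As a rough sketch, a small helper can summarise them from a plain header map; `CacheInfo` and `describeCache` are hypothetical names, and the helper only looks at the `HIT` status seen in this article.

```typescript
// Summary of the cache-related response headers.
interface CacheInfo {
  cloudflareCacheHit: boolean; // true when Cf-Cache-Status is HIT
  ageSeconds: number | null;   // value of the Age header, if present
}

function describeCache(headers: Record<string, string>): CacheInfo {
  // Header names are case-insensitive, so normalise them before lookup.
  const lower: Record<string, string> = {};
  for (const name of Object.keys(headers)) {
    lower[name.toLowerCase()] = headers[name] ?? "";
  }
  const status = (lower["cf-cache-status"] ?? "").toUpperCase();
  const age = parseInt(lower["age"] ?? "", 10);
  return {
    cloudflareCacheHit: status === "HIT",
    ageSeconds: isNaN(age) ? null : age,
  };
}
```

With the headers above, this reports a cache hit with an age of 25727 seconds, roughly seven hours, which means the response was stored well before any fix was deployed.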

One weird thing I would like to mention: Cloudflare automatically applies compression to improve page load times and reduce bandwidth usage. If Cloudflare detects that content is suitable for compression, it adds the Content-Encoding: gzip header even if the origin server didn’t compress the content. This was happening in my case when I did not specify headers or just forwarded them. What solved the problem was forwarding the content as plain text and defining the headers explicitly:

```typescript
export const loader = async () => {
  const plausibleScriptData = await fetch(
    "https://yourdomain.com/js/script.js"
  );
  const content = await plausibleScriptData.text();

  const { status } = plausibleScriptData;

  return new Response(content, {
    status,
    headers: {
      "Content-Encoding": "identity",
    },
  });
};
```

And don’t forget to purge the cache after deploying the code if the Cloudflare proxy is active.
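If you would rather keep most of the upstream headers than replace them all, one option is to drop only the headers that describe the body's wire format, since those stop being true once the body has been decoded with .text(). This is a sketch of that idea and not what the route above does; `STALE_BODY_HEADERS` and `forwardableHeaders` are hypothetical names.

```typescript
// Headers that describe the upstream body as it was transferred. After
// decoding the body, forwarding them would misdescribe what we send.
const STALE_BODY_HEADERS = ["content-encoding", "content-length"];

// Copies upstream headers as [name, value] pairs, minus the stale ones.
function forwardableHeaders(
  upstream: Array<[string, string]>
): Array<[string, string]> {
  return upstream.filter(
    ([name]) => STALE_BODY_HEADERS.indexOf(name.toLowerCase()) === -1
  );
}
```

The result can be passed as the `headers` option of `new Response(...)`, which accepts an array of name/value pairs. Dropping Content-Length matters as well: the upstream value describes the compressed size, which may be why the curl output earlier stops mid-script.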

Err 2: The Duplex Error

If you followed the aforementioned blog post when setting up proxying, you will recall that we also proxy Plausible’s event API. This means adding another route to Remix:

```typescript
import type { ActionFunctionArgs } from '@remix-run/node';

export const action = async ({ request }: ActionFunctionArgs) => {
  const { method, body } = request;

  const response = await fetch('https://yourdomain.com/api/event', {
    body,
    method,
    headers: {
      'Content-Type': 'application/json',
    },
  });
  const responseBody = await response.text();
  const { status, headers } = response;

  return new Response(responseBody, {
    status,
    headers,
  });
};
```

Here, I ran into 503: Unexpected Server Error in the browser. I had to debug it locally to get a more meaningful error message:

```
TypeError: RequestInit: duplex option is required when sending a body.
```

It turns out the error occurs because recent versions of Node.js (and by extension, environments like Vercel) enforce a part of the Fetch API specification that requires explicitly setting the duplex option when the request body is a stream, which request.body is. You can read up more here; the important bit is that a streamed request body needs duplex: "half".

So we can fix this issue by specifying that in our request:

```typescript
import type { ActionFunctionArgs } from '@remix-run/node';

export const action = async ({ request }: ActionFunctionArgs) => {
  const { method, body } = request;

  const response = await fetch('https://yourdomain.com/api/event', {
    body,
    method,
    headers: {
      'Content-Type': 'application/json',
    },
    duplex: "half",
  });
  const responseBody = await response.arrayBuffer();
  const { status, headers } = response;

  return new Response(responseBody, {
    status,
    headers,
  });
};
```

Adding duplex: "half" satisfies the Fetch API spec and fixes the error.
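An alternative worth knowing, sketched here under assumptions: the duplex requirement applies to streamed bodies, so reading the incoming body into a string first (for example with `await request.text()`) sidesteps it. `EventForwardInit` and `buildEventForwardInit` are hypothetical names. Forwarding the visitor's User-Agent and X-Forwarded-For is an addition that the route above does not make; I believe Plausible uses them to tell visitors apart, but treat that as an assumption and check its proxy documentation.

```typescript
// Options handed to fetch(); declared locally so the sketch has no dependencies.
interface EventForwardInit {
  method: string;
  headers: Record<string, string>;
  body: string;
}

// Builds fetch options from an already-buffered body. Because `body` is a
// string rather than a stream, no `duplex` option is involved.
function buildEventForwardInit(
  bodyText: string,
  userAgent: string | null,
  clientIp: string | null
): EventForwardInit {
  const headers: Record<string, string> = { "Content-Type": "application/json" };
  // Assumption: pass visitor details through only when the incoming request had them.
  if (userAgent) headers["User-Agent"] = userAgent;
  if (clientIp) headers["X-Forwarded-For"] = clientIp;
  return { method: "POST", headers, body: bodyText };
}
```

In the action, the result would be passed as the second argument to `fetch('https://yourdomain.com/api/event', init)`. The trade-off is that the whole body is held in memory, which is fine for small analytics events.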

Debugging Advice

Proxying third-party scripts in modern web frameworks like Remix can introduce unexpected complexities, especially when CDNs like Cloudflare sit between your server and users. What seems like straightforward code can fail in production due to content encoding mismatches or API specification changes.

So keep these in mind the next time you debug fetch or proxy related issues:

- Look at the full request with all headers in the terminal

- Disable Cloudflare proxy temporarily to eliminate variables

- Purge Cloudflare cache if you have proxy active for code changes to take effect

- Use arrayBuffer() when raw proxying

- When using text(), fix the headers!